Two of the most common steps in data wrangling, also known as the preparation of raw data for use in modelling or analysis, are data encoding and data transformation. But what sets them apart, and how can you save categorical characteristics with a large number of possible values? In this post, we’ll go over how to deal with high-cardinality categorical features in Python by discussing the theories and techniques of data encoding and data transformation.

Information Coding

Data encoding is the process of converting category variables into numeric values suitable for use in machine learning algorithms and statistical models. Categorical characteristics include things like skin tones, sexual orientations, and nationalities. Ordinal characteristics cannot have a natural order, although nominal features that have a natural order can also be ordinal characteristics. Colours are examples of nominal quantities, while ratings are examples of ordinal quantities. One-hot encoding, label encoding, and target encoding are only a few of the many methods of solving high cardinality that exist; these methods differ depending on the type of categorical features and the amount of possible permutations.

Altering the Information

Data transformation is the process of modifying a dataset’s numerical attributes, such as age, wealth, or height, to improve its suitability for analysis or modelling. Numerical features can be considered continuous if they span an infinite range of values, or discrete if they only span a finite range. Minimizing skewness, standardizing scale, and creating new features are just a few examples of the many uses for data transformation. There are a wide variety of techniques for transforming data that can be applied depending on the shape and scale of the numerical characteristics. Log transformation, normalization, and binning are just a few examples.

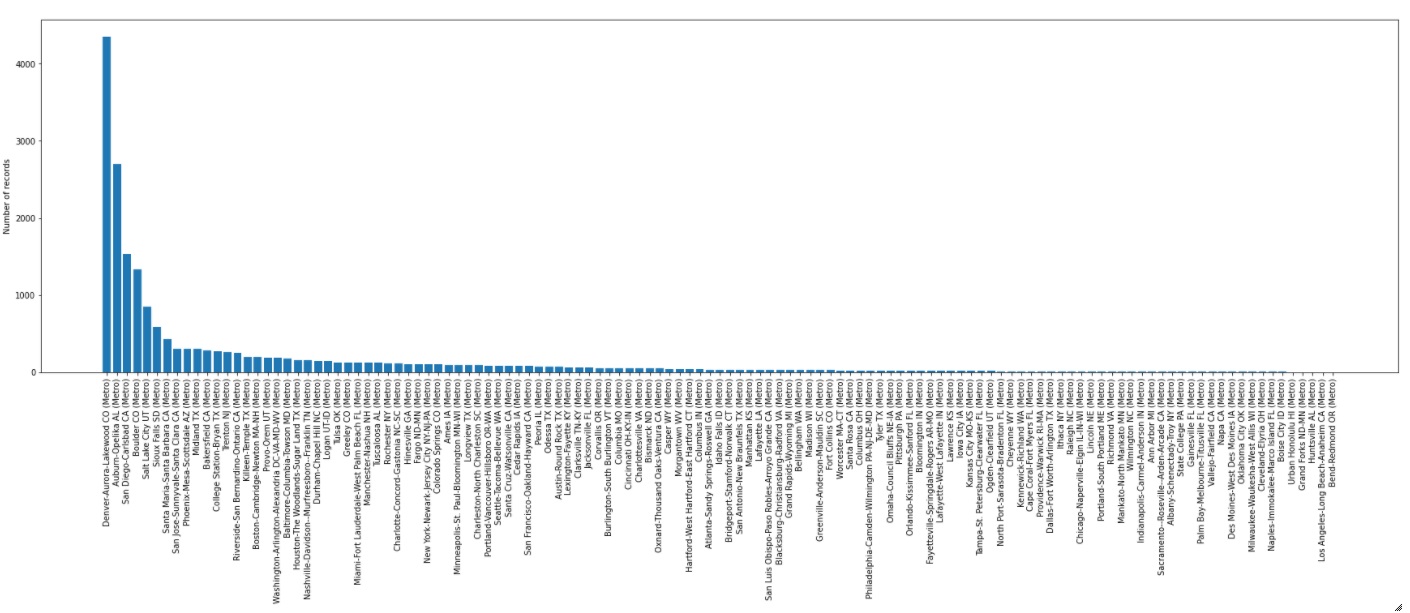

Features of a categorical nature with a high cardinality have many possible values. Categorical features with a high cardinality include product IDs, zip codes, and names. These features can generate several dummy variables, which can impact model efficiency by requiring more storage space. They can also provide challenges for data encoding. The cardinality of such traits can become prohibitively large, thus it is typically necessary to group them into fewer groups according to some criteria or logic. That is to say, we need to limit the number of permutations of these characteristics.

When Organized By Frequency

By clustering variables together in terms of their frequency (how often each characteristic appears in the data set), we can minimize the cardinality of high-cardinality categorical features. If we have a feature called city with hundreds of possible values, we might group them into ten or so categories, such as the most important cities, secondary cities, and the unknown category. This lets us capture the most common or crucial values while reducing the feature’s dimensionality.

Conclusion

We can reduce the cardinality of high-cardinality categorical features by grouping them according to the target, or the outcome variable we wish to predict or explain. For instance, if we have a feature named customer that contains millions of individual values, we can classify those customers into a smaller number of groups depending on their activities or replies, such as their rate of churn, their loyalty score, or the frequency with which they make purchases. By doing so, we may reduce the background noise of the feature while still retrieving its most informative or predictive values.